Episode Transcript

Speaker 0 00:00:00 Great. So, uh, it is seven o'clock. Uh, thank you for joining us today. I'm Scott Schiff, hosting Atlas Society Senior Fellow Rob Tracinski, talking about Objectivism and futurism. Uh, I'd ask everyone to share the room, and please raise your hand if you wanna join the conversation. Uh, Rob, thanks for doing this topic. What can Objectivism tell us about the future?

Speaker 1 00:00:27 Okay, great. So, um, I decided to broach this one because, um, I mentioned the idea of futurism the other day and somebody asked me, well, what the heck is futurism? And I thought, well, that's a good question, because it's one of these things that tends to be used in a loosey-goosey way to, you know, I guess, make somebody's visionary new book, allegedly visionary new book, sound good and exciting and make them sound like the visionary they want to be, whether that's actually true or not. So I wanted to talk a bit about what that means, but also why do we need such a concept? What's the purpose of such a thing? And then what does philosophy have to contribute to that? And what does Objectivism specifically have to contribute? So let's start with what is futurism?

Speaker 1 00:01:12 So, what is futurism? Futurism I'd describe as attempts to predict and explain changes due to advancing technology, and specifically changes to the way we're going to live, changes to our lifestyle, you know, not just that the newest model of car will have a couple extra features, but things that are actually going to make a substantive difference to the way that we live and work and play, and what our lifestyle is going to be. Um, so it's, you know, how technology will change the way we live. So, attempts to predict and to explain that. All right, now, obviously there's an overlap here with the role of philosophy, 'cause it's talking about what affects how you live, how you live your life, what kind of life you have, what's possible to you in the world. And, you know, one's approaching it from the technological perspective and the other's approaching it from this sort of wider perspective of what's possible to human beings, you know, given the nature of reality, given human nature, what's possible to us. And the other's saying, okay, but then very specifically, given this new technology, what's that going to do? And, you know, there are all sorts of examples; just go to the past.

Speaker 1 00:02:25 I'm gonna go to the past because those are things that aren't somebody's visionary dream; they're things that have actually happened. Right? So for example, if you look at the way transportation has changed over the last, uh, 200 years, right? With, uh, first railroads and steamships and, uh, automobiles and airplanes, people are orders of magnitude more mobile. We're more casually mobile than we used to be. And it has changed how we live and how we look at the world. Um, you know, I think it's kind of interesting. When I moved to Virginia, one thing I noticed is that if you go on any of the roads around here, every five miles you see some little sign for a place, a town, a village or something like that, that doesn't really exist anymore.

Speaker 1 00:03:14 There's a couple of old buildings, but you realize that, you know, 200 years ago when this was all settled... see, I wasn't used to seeing this in the Midwest, because in the Midwest you go back 200 years and there was nothing there, right? It was prairie. Um, but, you know, 200 years ago in Virginia, there was something here, and every five miles there was a little town. Oftentimes they have a name like "Station," meaning there's a railroad station there. Or like Kent's Store is one nearby, or Holly's Store is another one nearby. So there's a store or a station. There was some reason why people would go there, but they're every five miles. And I realized the reason they were every five miles is 'cause that's how far you could travel, uh, by foot or by horse, uh, as a day trip, you know, to go in.

Speaker 1 00:03:59 If you had to go in to buy something at the store and come back, you couldn't really go more than a couple of miles there and a couple miles back. Uh, it was unrealistic to travel any farther. So obviously the technology of transportation has radically changed how we view that sort of thing. I go about 30 miles to go shopping for groceries these days, uh, which would've been inconceivable back then. All right. So that's part of the story. Um, so it's looking at, you know, the question of what's possible to man. What is possible to us? In what way should we live and in what way can we live? But from a more specific angle than the philosopher looks at it, which is, you know, how is specific technology going to change that? Now here's the problem, though. In my experience, a lot of the futurism that you read out there, uh, if you go to a technology site... or, you know, the whole genre was sort of kicked off by Alvin Toffler, uh, in the sixties and seventies, when he did something called The Third Wave about how information technology was going to change everything, which he was right about, you know. But, uh, a lot of the futurism that you see these days, if you go to technology sites, is sort of a projection of various fantasies into the future, based less on what real technology is actually doing, or, you know, what near-future technology is actually doing.

Speaker 1 00:05:23 But more based on the philosophy, the existing philosophy of the person who's writing it and what he would like to be true, what he would like to have happen. The biggest example I saw of this... so, a little of my background on this: about, oh gosh, about six or seven years ago now, I started and ran for a while a site called RealClearFuture, which was under the RealClear umbrella. Uh, and eventually the whole, yeah. And I did the site called RealClearFuture, which was basically talking about emerging technology, talking about stuff that was 5, 10, 15 years down the road. You know, are we gonna have fusion power? Are we gonna have self-driving cars? That kind of thing. You know, it was basically: Elon Musk got up and tweeted something today; was it visionary or was it flimflam? That kind of thing.

Speaker 1 00:06:12 And, um, when I wrote about that, the main thing that struck me when I started sort of surveying what was being done in this field is there were a lot of these things out there that people were saying and repeating over and over again that didn't have a whole lot of basis in fact, but they had a lot of basis in wish-fulfillment fantasy, right? So one of them is, for example, electric vehicles and fossil fuels: fossil fuels are on their way out, electric vehicles, electric power's gonna totally replace them. It's all gonna happen soon, 'cause, you know, it's just around the corner, it's all gonna happen soon. And there was not any really great basis for believing this. Um, but, you know, it became the fashion because of people's preexisting commitment to environmentalism, to the global warming theory.

Speaker 1 00:07:04 And so if they wanted to be technologically optimistic and imagine a prosperous future or an advanced future, they had to say, oh, well then therefore it's all gotta be electric vehicles and solar power, and fossil fuels are on their way out. But, you know, 90% of that was way more wish fulfillment, uh, you know, "I wish this would be the result," rather than actually having strong evidence that that's already happening or is likely to happen. Uh, another one is, uh, you may have heard this, a guy came out with, I think, a book called Fully Automated Luxury Communism. And this is the old dream. It's not even a new thing that somebody's come up with, that we're gonna have automation and, uh, artificial intelligence and super-automation of all production.

Speaker 1 00:07:53 It's going to come along, and the machines are going to do all the work for us, so we can finally make communism work. You know, it didn't work the other 700 times we tried it; now we're finally gonna make it work because the machines will do all the work and we can all sit back and equally share in the rewards and have the ideal communist society. And it'll be luxurious, it'll be advanced, it'll be, uh, highly wealthy, and it'll be fully automated 'cause the machines are gonna do everything. And of course there's little reason to believe it's practical at all, much less any reason to believe it's imminent. But again, it's that wish fulfillment of, you know, we tried communism 700 times; maybe now, this 701st time, you know, the robots will come along and the technology will help us make it work.

Speaker 1 00:08:43 So a lot of futurism does tend to be people taking their preexisting philosophical commitments and sort of engaging less in scientific analysis than in science fiction, where they sort of project what they would like the ideal future to be and sort of fudge over all the details. All right. Now part of the problem with futurism is, you know, the old saying: predictions are hard, especially about the future, right? So it is very difficult to make predictions about what is going to happen five years from now, 15 years from now. What kind of power supplies are we gonna have? Uh, what kind of, uh, machines are we going to have or not have? You know, people like me have spent our entire lives waiting for the flying cars to arrive. Um, and we went past 2015, which is when we had flying cars in Back to the Future Part II.

Speaker 1 00:09:38 And, uh, we went past 2019, which is when we had flying cars in a dystopian future in Blade Runner. It was a dystopia, but we had flying cars in 2019; didn't happen, right? So there's all sorts of predictions that end up being wrong. And so, because prediction is inherently kind of a speculative field, I mean, you provide that much scope for speculation, you provide a lot of scope for people to read their wishes into the future rather than, you know, have a clear-eyed view of what's actually happening. Um, on the other hand, though, it's not totally a lost cause, 'cause there have been some predictions that are quite good. I suggest you go online; you can find these. It's a set of AT&T ads from the 1990s, and what makes them extra delicious is the way they were published.

Speaker 1 00:10:27 I think it was in Newsweek, one of these big news magazines that is now, like, a shadow of its former self. One of these news magazines, I think it was probably Time, published it as an insert, but it was an insert on CD-ROM. So <laugh>, you know, to show how futuristic they were, they were putting it on CD-ROM, which is a technology that has, you know, been and gone, uh, since then. But it was a series of ads narrated by Tom Selleck, from AT&T, talking about all this amazing new technology that was on the way, and, you know, things like you're gonna be able to send a fax from the beach, and it shows somebody with basically a, um, a tablet computer, which, you know, didn't really exist then, but, you know, they were projecting the future.

Speaker 1 00:11:11 Somebody with the tablet computer, you know, sending a fax, uh, from their chair, uh, at a beachside resort. Now, you know, there's some unintentional irony there, that yes, you could do exactly what they're talking about. You could send somebody a PDF of a document from your tablet on the beach, but who uses the fax these days, right? Um, or, uh, you know, things like automated tolls, you know, you can pay a toll without even stopping. We do that now. You know, you can do that if you get this little, uh, pass thing that you mount on the inside of your windshield; you can do that in Northern Virginia on the Beltway. Uh, and so there's all sorts of things that this thing predicted and got right, that were technology that was 5, 10, 15, 20 years off in the future from when they made it.

Speaker 1 00:12:02 So it is possible to make some projections, based on what's going on with existing technology, about how things are going to change. Now, it's possible to make very broad predictions; getting the specifics right is very difficult. Because, you know, the most ironic part of that whole ad campaign is that each one of them ends with this tagline: "And the people who will bring it to you: AT&T." And of course they didn't bring all that to us. You know, they brought some of it, they provided some of the telecommunications infrastructure for it, but, you know, if you thought, oh, AT&T's gonna be the one basically providing everything that comes on the internet today, well, no, it ended up being provided by, you know, a hundred other different people. So, you know, it's sometimes easy to get the, uh, it is possible.

Speaker 1 00:12:50 I shouldn't say it's easy. It is possible to get the broad outlines correct while getting a lot of the details wrong. Um, now, so if it is possible to do this, if it's possible to make these sorts of predictions or speculations and not be completely wrong, the question is, why is it necessary? What's the value of this? Why should we be doing this? Well, two main things, I think. One is, it helps us to prepare. And this was my big, uh, sales pitch, when I did RealClearFuture, of why you should be thinking about this: if these changes are coming, you know, if you have 5, 10, 15 years of warning that these things are happening, you get to prepare for them. You know, you realize ahead of time what's going to happen, and you can position yourself to be in the best situation to take advantage of it.

Speaker 1 00:13:40 Now, not in this very specific sense of, oh, I'm gonna buy AT&T stock 'cause they're gonna bring me all these things, 'cause you could be very wrong about that. But more in the sense of, if we are in an age of increasing automation and it is possible to automate things today that people couldn't automate before, then, you know, if you are in a, uh, a career or a job that can be automated, it's good to know that ahead of time, right? Because you know that, you know, my sitting around filling out forms, uh, that can easily be done by a computer. That's a dead-end job. I better look to acquire skills, uh, or, you know, go into some sort of management position, or do something so that I'm not the one doing the replaceable thing.

Speaker 1 00:14:28 I'm the one doing something that is not, uh, easily replaceable. Uh, now the second reason why futurism is helpful and necessary is that the future hasn't happened yet. It is still being shaped, and it's being shaped by us. And so one of the things that futurism can help us do, in the same way that science fiction has done this in the past, is it can help us shape the future by pointing out the possibilities. And, you know, in science fiction, the great example of this is Star Trek, where, you know, a whole bunch of the technology we have today was first seen <laugh>, you know, uh, 30 or 50 years ago, uh, a little more than 50, I think, in a Star Trek series. You know, like flip phones, which have been and gone <laugh>; they were first in the original Star Trek series. Tablet computers. Um, you know, the Google guys talk about how they want the Google, um, search function ultimately to operate the way the Star Trek computer does, right?

Speaker 1 00:15:31 Where you ask it a question and it gives you an answer, right, as if you're talking to a person. Um, and so, you know, imagining the possibilities of the future can help give direction and inspiration for the people who are actually developing the technology and making it happen, or for people who are finding uses for this technology, who try to imagine, how could this help us live differently? And the futurist could help direct that. Now, futurism that goes wrong, though, can also have a deleterious effect. And I did a lot of this sort of shooting down of the dystopianism that's very popular in futurism today. And primarily it's about machines, right? Machines and artificial intelligence and robots. And every single movie you watch, there's a killer robot that's going to become sentient.

Speaker 1 00:16:20 It's gonna kill us all. Um, and, uh, you know, the famous one of course is Terminator, but, you know, Marvel did Ultron, and, um, there's too many examples to even try to list here. Uh, I was disappointed, actually, in the Picard series of Star Trek that they did a couple years ago, that one of the plot points there is that they had artificial, uh, they had basically androids or robots building, uh, Federation ships in the shipyards on Mars. And there was an uprising by the, uh, by the androids. And they, uh, they fought against us and had to be defeated. And that's like this whole background for the series. Well, so it's like even the famously optimistic Star Trek series had to have a sort of mini robot apocalypse, uh, storyline.

Speaker 1 00:17:14 And one of the problems with that is, when you have this relentless negativism, it can actually work to suppress innovation, uh, in that field. And I think, you know, I talked before about how environmentalism leads people to say, oh, you know, no more fossil fuels, we're gonna be doing this, we can't do that. This sort of environmental doomsaying is a big drag on innovation and growth. And so you can actually prevent some of the future from happening because you are convinced of a dystopian, uh, outcome that doesn't really have evidence to support it. All right. So that's what futurism is, that's why it's something worth talking about and worth going into, and I would say just a few words, and then leave the rest up for discussion, about what Objectivism has to add.

Speaker 1 00:18:04 And the way I put the question is, is Objectivism a philosophy of the future? And of course the answer is, hell yes, Objectivism is a philosophy of the future. It is an optimistic, forward-looking, pro-growth, pro-technology, uh, pro-science, and pro-innovation philosophy. Um, and there's three key ways, I think, in which that's the case. Now, the thing about Objectivism that really strikes me as making it the philosophy of the future is, I talked earlier about how a lot of futurism is somebody projecting their preexisting philosophy onto, you know, future events, uh, you know, their environmentalist philosophy, their sort of Luddism, you know, that the robots are going to kill us, et cetera. And what struck me is most philosophies, far from being ready for the future, haven't even caught up with the past.

Speaker 1 00:18:58 You know, they haven't finished reckoning with the Industrial Revolution, much less, you know, the information age and what's going to come next. So there are three things I think Objectivism has that can help us learn. All right. So I mentioned, you know, most philosophies haven't even reckoned with the Industrial Revolution. Well, uh, um, Objectivism and Ayn Rand are a huge exception to that, in that she looked at that history of the last 200 years of the Industrial Revolution and drew a whole bunch of crucial, um... well, shoot, 50 years. But the point is that, uh, she drew a bunch of crucial conclusions from that, the primary one being the importance of reason as the source of wealth: that wealth wasn't created by guys twisting bolts in factories, it was created by people sitting around and thinking up new ideas.

Speaker 1 00:19:53 Well, in an age of increasing automation... and the automation is not really new; you know, we've been in an age of automation since the beginning of the Industrial Revolution. That's what the Industrial Revolution was: more and more human tasks being automated. And so she drew the conclusion from that, that, well, if all these human tasks can be automated, uh, by somebody coming up with a new invention, a new machine that does it, then the real source of wealth is the person who comes up with the idea. And that's a huge value in terms of the role of automation today, 'cause there's sort of a Luddite tendency, you know, left over from the Industrial Revolution, that, oh, the robots are gonna come along, they're gonna take all of our jobs, we're all gonna be poor. So we have to have universal basic income, or we have to have this, or we have to have that, in order to prevent us from all being, you know, basically left poor and destitute because the robots have taken all of our jobs. And what Ayn Rand is saying is,

Speaker 1 00:20:53 Well, this has basically been true all along. This is the basic nature of production: that it comes from ideas and not just from physical labor. And so the way to deal with the onset of automation is to be in the idea business, to focus on what can't be automated, which is, um, uh, conceptual and creative thinking. And that's the real source of wealth. And, you know, any job that involves rote repetition or mere physical action is going to be automated. So, as much as possible, you should be in the job of doing the thinking about how things are going to happen, of doing the conceptual and creative thinking that actually drives the economy. And which is a good idea whether there's any automation or not, uh, or whether there's more automation than there is now or not.

Speaker 1 00:21:48 The second thing is, and I talked about this a couple weeks ago in a thing on post-scarcity economics, the effect that altruism has as, again, a sort of, uh, a dead idea, or an idea that has been surpassed by events, but which still has this great purchase in people's heads and causes them not to understand what's happening in the present or what's gonna be happening in the future. And so there's still this assumption of a kind of zero-sum society where somebody has to be sacrificed for the sake of somebody else. And that's what a lot of people do. They say, well, we're gonna have automation, but that means that everybody's gonna be out of a job. Only a few people are gonna be wealthy. So we have to have mass redistribution and government controlling the economy in order to deal with the fact that, you know... they have this idea that if huge amounts of new wealth are being created by automation, that must mean it's being taken away from somebody else.

Speaker 1 00:22:49 And that I see fundamentally as the philosophical influence of altruism as a philosophy, which says somebody's sacrifice is always necessary. Somebody's sacrifice is always required. And it's a matter of whether you're sacrificing yourself to others or others are sacrificing themselves to you. And so it tends toward this zero-sum view of the world, in which, if huge progress and, uh, huge creation of wealth is happening, then somebody's gotta be impoverished. And so therefore we need to have government come in, and we need to have these interventions to prevent that from happening. Now, the third thing is, I think, Objectivism, you know, tying into some of the reasons we just talked about in the first two, the belief in reason, the belief that people acting by their mutual self-interest can, uh, make everybody better off, that chases away the dystopianism in favor of an optimistic and benevolent view of the future.

Speaker 1 00:23:46 And Objectivism has a benevolent view of the future because it has a benevolent view of human nature and of the nature of the world. We have the benevolent universe premise. We view human beings fundamentally as people who go out and come up with new ideas and do new thinking and come up with new innovations that make life better, which is sort of an antidote to this sort of reflexive dystopianism you get everywhere else, where technological change is always viewed as, well, but what about, you know, all our information's gonna be stolen by big corporations, or, uh, um, the robots are gonna take all of our jobs, or the robots are gonna come kill us. Every time they see an innovation or a change, people immediately go to what's the worst thing that could possibly happen.

Speaker 1 00:24:30 What's the dystopian result? And of course, history shows, well, that's not what happens. We don't go straight to the dystopian results. We've actually had, you know, hundreds of years of progress. So the third big thing I wanna add there is that, um, Objectivism views achievement and technology and innovation as something to be celebrated and not feared. And those are the three big things: the emphasis on reason, and specifically meaning, uh, creative and conceptual thinking; the rejection of altruism, and the idea that, um, increases in wealth don't have to impoverish anyone, that we can all work together by mutual, uh, consent and to mutual advantage; and then third is this benevolent view of the universe and man's place in it, which creates an optimism and chases away the dystopianism of a lot of today's futurism. All right. So with all that said, and I went longer than I expected, uh, but I have many thoughts on this, uh, I'm gonna open it up and, you know, let people add questions, comments, disagreements, et cetera.

Speaker 0 00:25:41 Great. Yeah. We'd encourage anyone that wants to join to raise your hand. We'll bring you up to the stage. Uh, I've got a lot of questions I've been, uh, working on as well, uh, as you've been talking. One of the first ones is, you know, Rand talks about the role of intellectuals, and, uh, um, you know, is the main role for intellectuals to help prepare people for these technological changes so that they're not enemies of the future?

Speaker 1 00:26:11 Oh yeah, absolutely. Well, you know, the way I like to put it is the role of intellectuals is supposed to be to explain what's happening in the world and not to explain it away. And a lot of times, you know, the role of the intellectual these days is to explain away what's happening in the world by saying, well, no, no, no, that's not really what's happening. Um, and I think that's what they've been doing since the beginning of the Industrial Revolution: explaining away the Industrial Revolution. I mean, you know, uh, a great example of that is Karl Marx, right? Who says, no, no, no, no. See this great liberation of labor that's happened in the Industrial Revolution? It's actually just the same old system. It's wage slavery, they're robber barons, nothing has changed at all from the Middle Ages; in fact, actually, it's worse than the Middle Ages. He, uh, tends to have a rosy and romanticized view of the economy of the Middle Ages. So, you know, a lot of this is people explaining away. So yes, absolutely. By explaining what's already happened and then anticipating, you know, the future of that, it's to prepare people for what is going to be happening and what's going to be required to, you know, uh, survive and thrive, uh, in the future.

Speaker 0 00:27:29 Thank you. Go ahead, Marcus.

Speaker 3 00:27:32 Hello guys. How are you? I'm sorry I interrupted before. I just didn't know how this worked. So, uh, yeah, very, uh, interesting what you have said, Rob, and I very much agree with most of your, uh, points. Uh, but, and this is an issue that I also think about a lot, and my idea is we have never actually been able to, uh, um, completely fulfill this idea of the Enlightenment, ultimately, I think. I don't know if you guys would agree. Which is, you know, what you say in principle, you know, the freedom of the individual, the [unclear] of the individual. And, uh, and then that society can be, uh, a community of the commons, if you will, rather than something imposed, et cetera. And, you know, and again, everybody pursuing their own interest can generate wealth.

Speaker 3 00:28:34 So it's not a zero-sum-game kind of thing, uh, the economic game. But we <laugh> have never actually quite... I mean, I think this is an idea that's been around, as I said, since the Enlightenment, and we have never quite been able to, uh, to implement it, obviously, but we have gotten much closer and we are definitely way better. I don't know if you guys are familiar with the work of Pinker, Steven Pinker. Uh, yeah. So, yeah, so I mean, I definitely think along those lines. But in spite of that, you know, the Industrial Revolution was profoundly dystopian in its beginning. I mean, it was only of benefit to the minority in the long run. I don't know if you guys would agree with me, and I think that there's data on this. You know, it took several decades, you know, until the average wealth, for example, of England, you know, increased, and if you measure things like personal happiness, then it will get maybe even a little more complicated. But in any case, my point is, then that happened, then you have, you know, the phenomenon of the world wars still.

Speaker 3 00:30:11 So, and there was a very strong movement of thinking along this line by the end of the 19th century as well. I mean, you read some of these guys, uh, Jules Verne would be, you know, one of the most prominent examples. So there's, uh, uh,

Speaker 1 00:30:30 Do you wanna give Robert,

Speaker 3 00:30:31 I completely agree with you that we should strive for that, but things can go wrong. Let's put it this way: whatever can go wrong will go wrong, given enough time. That's Murphy's law, I think, no? So we should be, I mean, the skepticism should be very, uh, prevalent whenever we're going to take any of these steps, but again, I

Speaker 1 00:30:56 Okay. Marcus, you wanna give him a chance to answer? Yeah, well, this is all great stuff. I'm happy you brought this all up. Okay. So one small quibble, which is, I'm not as certain about the issue that, you know, um... I do think, you know, the Industrial Revolution took a while to take off and to have a really powerful effect on, uh, people's wellbeing. I mean, as with any, um, geometric growth, you know, any pattern of geometric growth, what does it look like at the beginning? You know, relative to later growth, the beginning part of the upward curve, uh, looks small, looks almost nearly flat. It's kind of indiscernible as growth, and then suddenly it explodes. And that's kind of what happened with the Industrial Revolution, but, you know, the growth was happening at the beginning.

Speaker 1 00:31:47 It just didn't become as starkly noticeable until it had a couple of decades to compound. Now, what I would also say is that one of the things that fed the Industrial Revolution is you had a mass exodus of people from agricultural jobs into the cities, into industry. And a lot of that wouldn't have been happening if living on the farm, if living out in the agricultural economy, was so much better and more fulfilling than working in the factory. So, you know, it's clear that at the time, this was something that was actually conceived by the people who were doing it. Nobody was rounding them up and forcing them to work in the factories. It was conceived by the people who were working there to be a better option than what they had.

Speaker 1 00:32:31 And I think a lot of this looking back of, oh, look how dystopian the early, uh, Industrial Revolution was, is like, well, yes, it was absolutely dystopian by our standards today, but everything was dystopian by our standards today. You know, life was pretty miserable for everyone by our standards today. And in a lot of cases, you know, life out on the farm, uh, life working in manual labor and agriculture in, you know, 1795 was even worse than life working in the factory. All right. So I'm not a great expert on that, so I don't wanna get into the weeds, but I think that's just something to think about in

Speaker 3 00:33:14 Yes. Yeah. Completely agree in that regard and to a great extent, the, the, the immigration into the, into the cities in that time must

Speaker 1 00:33:24 Remember, start out a little bit. I think he was still answering. Just finish your sentence. I'll get back to this. Oh, I'm sorry. I'm sorry. We have not fully implemented the vision of the Enlightenment, but having partially implemented it is pretty darn good. You know, and you're always gonna face resistance. And like you mentioned, the world wars, you know, you're gonna have people who harken back to this romanticism of a pre-industrial, pre-technological, pre-rational age, which is very much what motivated, you know, the fascists. The fascists had this, I mean, it's romanticism in the philosophical sense of, like, German Romanticism, this belief in worship of emotions and blood and soil, that was a very anti-Enlightenment viewpoint. So we still have, I think, an ideological contest, a philosophical contest, going on. And that's why we have not, you know, fully implemented this, because first of all, the vision of the Enlightenment was kind of vague. They didn't really know what all it would entail. We had to find a lot of that stuff out, figure it out as we went along. And also because there's always going to be this philosophical contest with people who, you know, dislike the Enlightenment because it doesn't leave enough room for religion, or it doesn't leave enough room for blood and soil, or whatever it is that they want to, you know, nostalgically keep about the past. So anyway, go back to Marcus.

Speaker 3 00:34:43 So yes, yes. Uh, very much agree with you. The movement of the, uh, peasants into the, uh, urban centers in the beginning of the Industrial Revolution, remember that it was driven also, to a great extent, because there was an agricultural revolution preceding that, that very much pushed people out of the countryside, because the farming was becoming so efficient that you needed much less people to farm. And then those people literally had nothing else to do than going, but in any case, I fully agree with you, we very much have made progress, but my point even before was that along that progress, there are big bumps and potential big, uh, setbacks. So my point was that we should be very much aware of that, because our technology also can potentially have a very much more disastrous consequence if it goes wrong. Black Mirror, only one <laugh>.

Speaker 3 00:35:46 And remember, we also escaped a nuclear war by the skin of our teeth, when you look at it, and we're still not done with it. So my point is, I completely agree with you. We should strive for the, uh, for the ideals of the Enlightenment, everything you described at the beginning, as a compass to aim for, but the big warnings are there, like the same fascist phenomenon that you just described, which, coincidentally, they saw themselves as something very advanced for their times as well. You know, it was a very weird, very nonsensical idea, too, but let's not get into that. The idea is that, uh, yeah, so we should be aware. We should be aware that we're playing with fire.

Speaker 1 00:36:37 <laugh>. Yeah. One thing I think also is, um, one of the lessons, I think, of futurism, of sort of looking at how future technology develops, is that I think the lesson of World War II and the Cold War, nuclear weapons, and all that, the lesson of that is that strongman dictatorship and totalitarianism are not compatible with the future, right? <laugh> That, you know, in places, you know, without the totalitarianism of the Soviets or of the, uh, of the Nazis, very

Speaker 3 00:37:08 Important point,

Speaker 1 00:37:09 You know, none of this technology would've produced the, you know, I mean, the thing that made the Nazis such, uh, such big villains is that they took over an advanced, industrialized, uh, technologically, uh, competent country. You know, they had lots of great engineers, they had Wernher von Braun and all those guys building rockets. So, um, you know, a country with high technology and advancing technology and all that is 10 times more dangerous when it becomes totalitarian, when it goes backwards in its ideas. And so that's one of the other warnings, is that, you know, I think it makes me kind of a George Bushian, you know, he said his goal was to end tyranny in the world by the end of the century. And I'm like, yes, absolutely. We need to, as a matter of survival, because God knows what technology we're gonna have in 2100 that we don't wanna be in the hands of these lunatics.

Speaker 1 00:38:08 Anyway, that's just getting, um, you know, a kind of, um, intellectual thing that comes up about the future is, is another dark ages possible, or are we too technologically advanced for that? Well, I think, you know, a dark age can happen. Dark ages are, uh, back in the day, even with, you know, the actual dark age of, uh, the Middle Ages, or the early Middle Ages, uh, by the late Middle Ages we were, you know, making huge advances again. Uh, but there's a period of like 300 years in there where the dark ages were really dark and where basically everything that was built by classical civilization was being torn down and not really anything was being built in its place. And, uh, but all dark ages are local.

Speaker 1 00:38:56 Uh, that's one of the lessons of history, which is that, now, they can be local in the sense of most of Europe, right? But while civilization was being torn down, uh, by barbarian invasions and collapse, uh, in Western Europe, you know, the Byzantine Empire was still there and maintained a large zone of control. And so my hope is that, especially with today's advances in communications and, you know, back then the idea that Western ideas would reach as far as China or someplace like that, or East Asia, would be ridiculous. And of course now they have reached East Asia, they're widely known and, uh, to some extent adopted in various countries there, uh, maybe less so in China these days, but even there, it has an impact. So what makes me optimistic about the future is that the technology and the scientific knowledge, but also some of the philosophical knowledge, some of the Enlightenment ideals, and some of the, uh, political ideals have already spread and been adopted throughout a huge portion of the world.

Speaker 1 00:40:01 So, you know, uh, Ronald Reagan used to say at the end of the Cold War, you know, that, uh, he talked to, uh, I think a Cuban refugee or something like that, who said he felt sorry for him. He says, what do you mean? Why do you feel sorry for me? He says, because you have no place to escape to, right? So when communism came to Cuba, that guy could escape to America. But if communism comes to America, if America's destroyed, there's gonna be no place to escape to, cuz, you know, the communists are gonna control everything. Maybe that was true in, you know, circa 1980. I really don't think that that's possible today. Cuz, you know, if the worst were to happen in the US and we were to collapse and become a dictatorship, there would be other places that would be able to eventually take that leadership. There'd be other places where these ideas survive and where progress continues.

Speaker 0 00:40:55 That's fair. Um, let me ask you, is it fair to say that futurism can easily become utopian? Whether it's like heaven or equality of outcomes? I mean, people are, uh, kind of taking their eyes off the present to some degree. Yeah.

Speaker 1 00:41:12 Well, I mean, there's oddly a lot of futurism these days that's dystopian, and when it's not dystopian, it does tend to be utopian. Right. <laugh> Uh, and utopian in a sense, now, you know, the original sense, you know, Thomas More's sense, meaning "no place," right? <laugh> Um, and utopian thinking gets a bad rap, cuz the idea is that when you do these projections, like I said, when you do something that's a projection based on your wish fulfillment, of what you would like to see happen, of the future you wish would happen, you tend to create something that's utterly unrealistic as a future. And one of my favorites on this is this fully automated luxury communism idea, or various sort of watered-down versions of that, like universal basic income, where the idea is, oh, the machines are gonna be doing all the work.

Speaker 1 00:42:00 You don't have to have a job anymore. And you know, you'll get paid a nice, uh, regular paycheck by the government. You'll be able to live a nice life without having to worry about work at all. And of course, you know, anybody who's ever managed a highly complex technological system <laugh> is gonna be able to throw cold water on that, that actually, in some ways, the more complex a technological system, the more spectacularly it can all fall apart if somebody isn't monitoring it and fixing it and taking care of it all the time. You know, you actually require a whole new class of workers to manage an automated system. Uh, you know, how many people work in IT, information technology, these days, right? Uh, and this is the thing that's associated with, so, automation, you know, I found a great document from like 1964, '63 or '64, of these people talking about how, oh, we're headed towards this cybernetic,

Speaker 1 00:42:56 I don't think they quite used cybernetic. They used some variation on that, that was like the term in vogue at the time. But the cybernetic future is coming, where all this computer technology is gonna automate everything and we won't have to work. And therefore we should have this giant new welfare state. And they were writing this off to Lyndon Johnson as part of the basis for the, uh, as part of the agitation for the Great Society welfare programs. And the funny thing is, you know, these people imagined that the, you know, the computerized society we live in today was all going to go automatically and nobody would have to do anything. And of course, you know, there are now hundreds of thousands at least, I'm sure millions, of people employed in the IT business, you know, doing all the work of patching together all these different systems that don't work automatically, that need to be maintained and functioning.

Speaker 1 00:43:50 So that's where I see, you know, the sort of starry-eyed utopianism, and I think what drives it is, it's bad science fiction, where people have a philosophical loyalty that they wanna somehow fantasize would all work. And so they create science fiction about how it's all going to work without doing the hard work of saying, well, you know, how do these systems actually work? How are they going to function? Is this actually possible? I mean, if I could come to terms with the fact that I'm not going to get a flying car, I think maybe some of these people could come to terms with the fact that they're not going to get fully automated luxury communism. <laugh> They're still gonna try. I, I thought

Speaker 0 00:44:31 It was quite, um, it was good when you were talking about how these people, they just, basically, it seemed like you were saying, use their agenda, um, to, uh, JAG, if you wanna jump in here. You're welcome to

Speaker 1 00:44:45 Yeah.

Speaker 4 00:44:45 Uh, Rob, I was just looking at some of the questions that we got for our Instagram takeover and one was about accelerationism, which was a new term to me. But, um, I was wondering if, if that had crossed your radar and if you had any thoughts. Oh yeah.

Speaker 1 00:45:03 Well, accelerationism, I have thoughts about, but accelerationism is not as nice as it sounds. So you think, in the context of futurism, you think, oh, accelerationism means we should expand technological progress. We should be, you know, doing all these things to promote and supercharge our growth in technological progress. That's not what accelerationism is, at least not in the version I've encountered. And it's probably what this is about. Accelerationism in this viewpoint is, we have all the contradictions in society that are leading to conflict and collapse. And so we should try to accelerate the conflict. And some of the people who do this are like your alt-right, white nationalist types, who basically say, you know, the race war is coming or the American civil war is coming. So we should accelerate it. We should make things worse. We should, uh, increase the contradictions.

Speaker 1 00:45:51 And that's actually an old Marxist thing that they used to do, right? So there are all these inherent contradictions in capitalism. Capitalism will finally be torn apart by its own internal contradictions. So they used to have a term, and I can't remember the exact wording of it anymore, but it's basically, we should accelerate the contradictions. So we should increase and exaggerate the contradictions so that the existing system will collapse. And then we can replace it with our ideal utopian version. And I think that's what accelerationism sort of means. And my recollection is it's associated largely with the sort of alt-right types, but it probably has a wider, you know, it's a popular idea. The communists used to do it, uh, anybody in any, um, I mean, in a way John Galt is an accelerationist in that sense, uh, you know, in that he says, you know, the system is heading towards collapse, so let's collapse it so that we can then have what comes after it. Um, but generally it's used by people who have less benevolent intentions, cuz he wants to say, okay, you know, we're collapsing this dictatorship. Let's knock the props out from under it, watch it collapse, and then we can have a free society again. A lot of the accelerationists are people who have much less benevolent intentions.

Speaker 4 00:47:07 Yeah. I saw that there was kind of an alt-right version of it, but also that there was a left version of it coming out of critical social justice theory. Um, but I thought it was interesting. I'm like, well, they're wrong, because actually if we did increase, you know, uh, basically hyper-capitalism, which is a far cry from what we have today, mm-hmm <affirmative>, um, that would be fine by me.

Speaker 1 00:47:34 Well, and the great thing is, if we increase capitalism, I dunno about hyper-capitalism or not, but if we increase the amount of capitalism, uh, it would not lead to, like, cataclysmic conflict, cuz, you know, a lot of these things are fantasies of, let's have the big final battle, the big final conflict. I think they're fantasies that we're going to go into the future by fighting each other, right? We'll fight each other, and one side will win. And that's how we'll get into the future we want. Whereas, you know, the whole point of capitalism is, no, we're gonna build a lot of stuff and that's how we're gonna get into the future. And we don't wanna fight each other. We wanna fight each other as little as possible so we can go build a bunch of stuff. And that's the sort of benevolent version. I think the accelerationism we need is accelerate capitalism, accelerate innovation, accelerate growth, and let's get us, you know, into this science fiction future that we want to live in. I mean, you know, the good kind of science fiction future.

Speaker 4 00:48:32 Yeah, no I agree. Um, you know, their motives may be bad, but their premises are worse. And um, you know, if get board with, uh, reducing on capitalism then plays out

Speaker 1 00:48:50 That's a, I was gonna talk about that. I think to some degree, um, that anyone with an ideology has their own vision of what inevitable victory looks like. And also the, you know, catastrophizing, uh, you know, if your opponent's views get put into place. Yeah, yeah. I've personally become much more of a gradualist. Cause I think one of the things, we had a discussion about this a while back, a couple months ago, but one of the things I've taken away from, you know, somebody asked if I'm familiar with Steven Pinker. Yes, I've interviewed him, I've read his books. Um, and especially his book Enlightenment Now, where he goes in this long litany, and it's like way too much detail, and precisely cuz it's way too much detail,

Speaker 1 00:49:39 It's exactly the right amount of detail, because, you know, people are so pessimistic these days. They're so inclined to think everything's getting worse. They need way too much detail on how everything's getting better. And that affected me in one way, which is that it made me more of a gradualist, you know, cuz the idea is that, well, if everything has gotten so much better, and what shocked me is, you look at most of these graphs of economic, material, even, you know, educational progress and all of that, and things we think of as huge seismic events, like World War II, are hardly a blip. They hardly register. The progress just keeps going. You know, World War II, a hundred million people killed, you know, the worldwide disaster, and things being blown up, and civilization just keeps going past it with hardly, like, a speed bump.

Speaker 1 00:50:28 Um, and it made me realize that, um, you know, so much is going right that I think we should be focused a lot less on the cataclysmic possibilities, or on how you have to totally tear down the existing system, and our approach should be much more gradualist and focus on, well, look, you know, can we make ourselves a little freer over here and make ourselves a little freer over there, and just keep chipping away. And what you'll see is that as you do that, life continues to get better and it gets better faster. And you have this thing where, instead of one cataclysmic showdown where you have to totally defeat your enemies, you just do one little thing at a time and things just get incrementally better, and the benefits compound. You know, annual compounding adds up to a huge amount at the end. And so I think I've become a little bit more of a gradualist in my, uh, idea of what the ideal political change looks like.

Speaker 0 00:51:32 That's fair. I consider myself an incrementalist too. I think what happens is that, uh, whether it's the administrative state or the squad, the lessons that they've taken from, from lockdowns or, or what have you, is that we can make huge changes. We don't have to be gradualist. We can go for the whole socialist, uh, you know, Kaba right now.

Speaker 1 00:51:55 But did they though, right? Everywhere I've been so far, you would think that the lockdowns in the pandemic never happened.

Speaker 0 00:52:02 Yeah. I,

Speaker 1 00:52:04 I mean, think about that. I like to use World War II as an example, cuz a lot of people got super excited in World War I and World War II. A lot of people got super excited of, oh, this regimented system of command and control, where everybody wears the same uniform and sleeps in the barracks and they all follow orders and they sacrifice and they, uh, oh, they call it recycling, but, you know, they take their old newspapers and they bring them in to be salvaged, and they accept rationing. Isn't this great? This is the ideal future. We have all this power. We can now impose this system. And you know, they always try to find, you know, this is where the term "the moral equivalent of war" comes from.

Speaker 1 00:52:43 Right. They always try to find, let's harness this willingness to do this in wartime. Let's harness this for our other goals over here and over there. And what happens is, the minute the war is over <laugh>, uh, actually at least a little before the war is over, cuz this had started to happen in like '44, uh, everybody says, okay, great, let's go back to normal. And, uh, there's a, was it a Cole Porter? I think it was a Cole Porter musical, not one of his better ones, done in the late forties, called Call Me Mister. And it was about the absolute joy and elation of these guys, you know, leaving the army and getting called Mister again instead of Sergeant or Private. Uh <laugh>. And so, you know, people were very, very happy to get back to normal life after the emergency.

Speaker 1 00:53:31 I'm seeing the same thing with the pandemic. I think some people have gone, you know, this way. I think there are places where people have just said, we're just gonna get COVID <laugh>, and, uh, they take few precautions, but it shows how resistant people are to actually, you know, this idea of, oh, people will accept all these radical changes. No, they won't. People are highly resistant to it, and it doesn't matter, blue state, red state, they will go back to normal as soon as they can, or sometimes sooner, as soon as it's socially acceptable, as soon as enough other people do it that, you know, you have this sort of, everything flips. And I think that's already happened with the pandemic. It happened, you know, sometime early this year.

Speaker 4 00:54:16 You haven't been to San Francisco lately, Rob.

Speaker 1 00:54:19 <laugh> Maybe I haven't. Maybe there are a few places. Out here in Virginia it's pretty much happened.

Speaker 0 00:54:24 That's, uh, that's good. Hopefully the monkeypox thing won't get too bad, but I wanna welcome TAS founder, David Kelley, to the stage.

Speaker 1 00:54:33 By the way, I wanna say monkeypox is an awesome name for a disease. <laugh> It's like a 14-year-old thought it up. It's just like, well, let's come up with the scariest-sounding name for a disease. I know: monkeypox.

Speaker 5 00:54:47 <laugh> all

Speaker 1 00:54:48 Right. So, so David, come on

Speaker 5 00:54:51 Laughing. Now, what Rob, I just came in late, so I missed, uh, part of it. Um, but, uh, you know, what Rob was saying about World War II is absolutely right. My father was a vet from World War II, in the Navy. Um, and, uh, when the war was over, he couldn't wait to get his law degree, marry my mother, have children, five altogether. And, um, you know, he was very typical of that generation, I think. So, yeah, back to normal. But here's the question for Rob. Uh, I believe in incremental change too. Uh, we see it happening economically, in business. Um, every advance in technology, you know, spawns, mm-hmm <affirmative>, further advances, but it works in politics too, I think. Um, and that's where, you know, the issue of gradualism comes up. The left is also working on gradualism, and maybe not to the satisfaction of the Squad, but they're working in a democratic system where they have to convince, um, a legislature to move forward, but they keep doing it. Um, the Build Back Better program, you know, uh, tanked, uh, but now they're bringing back key elements of it at what, 70,000, 7,700 something million. So they're getting, um, and the state has been growing, as we all know, uh, over decades,

Speaker 5 00:56:25 Step by step. Um, and I wonder how, you know, we we've been fighting a resistance battle against it incrementally, so, so to speak, and I think that's all we can do, but what are, what are some strategies that we can apply that, um, would reverse the political creep of socialism?

Speaker 1 00:56:48 Okay. So lemme say a couple quick things about that, though you've asked a question that could take an hour to answer and we have four minutes left, so <laugh> I'll do the briefest possible version of it. Maybe we'll do a future Clubhouse on that. Um, I think of it as the new Fabians. Uh, the Fabian socialists, they had this incremental, gradualist approach. It's actually named after a Roman general named Fabius, who, um, uh, was called the Delayer, because his strategy was, he couldn't figure out how to beat Hannibal. Uh, you know, Hannibal was coming, marching towards Rome. He couldn't figure out how to beat him. So he arranged for a series of battles where he basically fought the battle and retreated, fought the battle and retreated, and his job was just to delay Hannibal long enough that he would sort of run outta steam, and it actually worked.

Speaker 1 00:57:33 Yeah. And so I think that's, you know, a much better analogy for what we're doing now than for the socialists in the early 20th century, um, where, you know, in a way, we're trying to buy time for capitalism to keep growing and producing great new things, uh, by stopping one little thing after another, and taking this giant Build Back Better stimulus thing with all sorts of new government regulations and, well, okay, maybe they can get through a small, much smaller, uh, version of it with a lot of the worst stuff taken out and a few of the bad things still in there. But what we still haven't really figured out how to do, I think there's a, um, a critical mass you need to reach to be able to do this, is we haven't figured out how to stop the one-way ratchet, right?

Speaker 1 00:58:23 This is the idea that, yeah, once a government program gets passed, you will never get rid of it. There've been like a handful of examples, like the Interstate Commerce Commission, a handful of examples that actually were gotten rid of and abolished, but, you know, a big disappointment to me was Obamacare. You know, we got a Republican president, a Republican majority in both houses of Congress. They promised us for six years, the big thing we're gonna do is we're gonna repeal Obamacare, and they couldn't manage to do it. Uh, so I think the problem is that ideologically, philosophically, you have to get a large enough critical mass to make it possible for you to rally public opinion for the much harder job of getting rid of something once it's been passed. Um, and obviously my disappointment with the Republicans stemming from that is that they showed that they were still too committed to the welfare state and to big government, and didn't really want the responsibility of having to get rid of it.

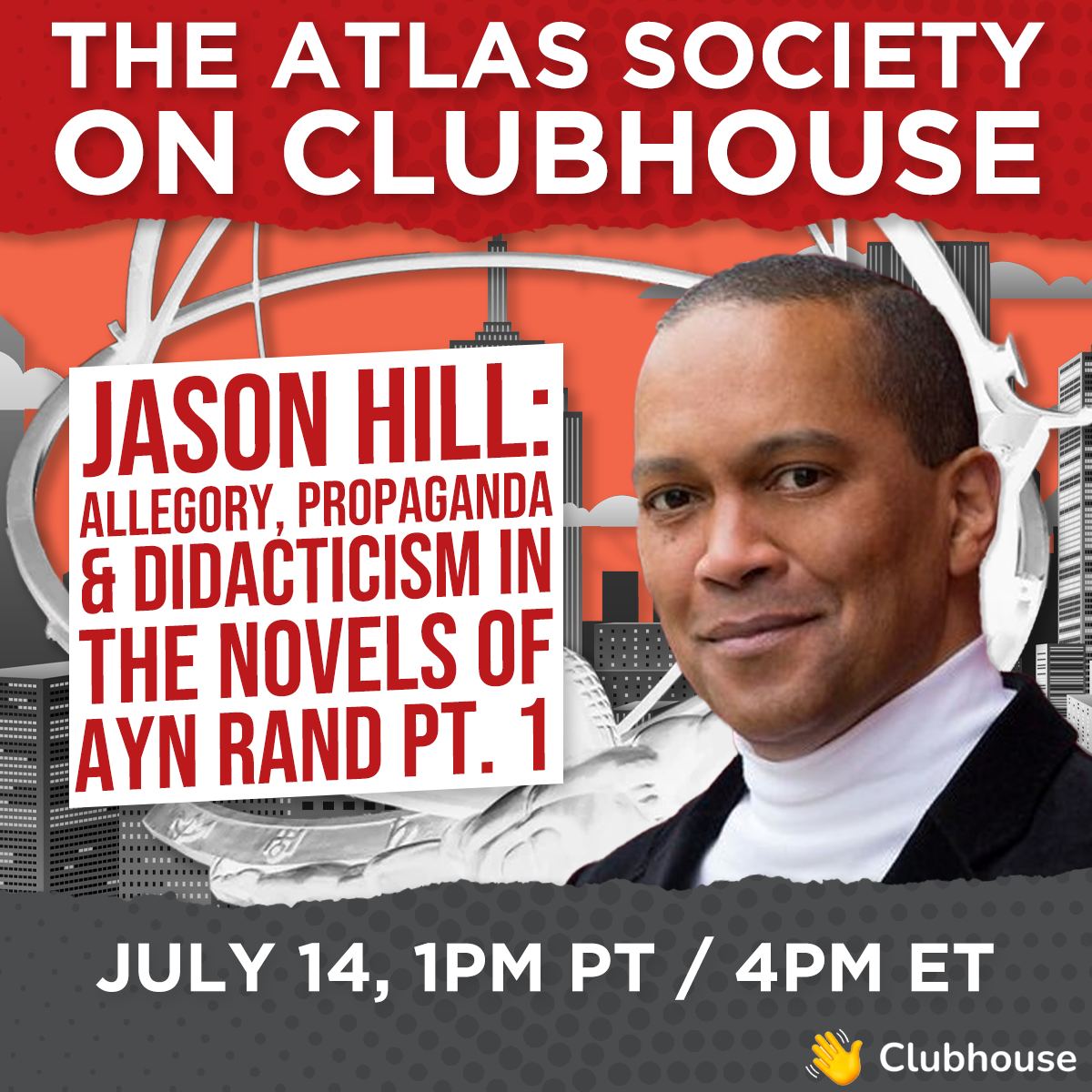

Speaker 0 00:59:26 Okay. I think I remember John McCain being one of the final votes against, but, uh, be that as it may, it was a great topic today. Rob, thank you for doing it. Tomorrow at 5:00 PM, The Atlas Society Asks features our CEO, Jennifer Grossman, asking Barbara Oakley, uh, professor of engineering, about the state of education and learning. Thursday, back here on Clubhouse, also at 5:00 PM Eastern, Professor Jason Hill will be here discussing the nature of political happiness, another fascinating topic. Uh, also, uh, September 2nd is going to be our second annual Atlas Shrugged Day, uh, current date in the book, and it was a lot of fun last year. We broke out into breakout rooms with individual scholars, very interactive. So mark your calendars, it'll be on a Friday. Uh, great topic, Rob. Thanks for doing this. Thanks to everyone who participated. JAG, final thoughts?

Speaker 7 01:00:23 Yeah. Just wanted to add an extra note on tomorrow's interview with Professor Barbara Oakley. In addition to having, um, produced the world's most popular massive online, uh, education course, on how to learn, she's also, this is partially what caught our eyes, she's a co-author of a book called Pathological Altruism, which really was maybe one of the first academic books, um, to take a look at what are some of the downsides of, um, altruism and even empathy. So, um, I'm gonna be interested to hear her take on that, as well as, she has, uh, some interesting criticism of Ayn Rand. I think she kind of has an appreciation for Objectivism, but also says that some great geniuses and visionaries, um, can sometimes have aspects of their personality which help them propel their visions forward, persevere against adversity and, um, conflict, but that also can have some negative, uh, sides as well. So to hearing about you,

Speaker 1 01:01:41 Barbara Oakley's work on Pathological Altruism, so I'm definitely gonna listen to that. That sounds awesome.

Speaker 0 01:01:47 Cool, great. Uh, thanks again for, uh, everyone who participated, uh, hope to see you later this week. Take care.

Speaker 1 01:01:54 Thanks everyone.